LiveKit · YOLO · RAG · Depth Estimation

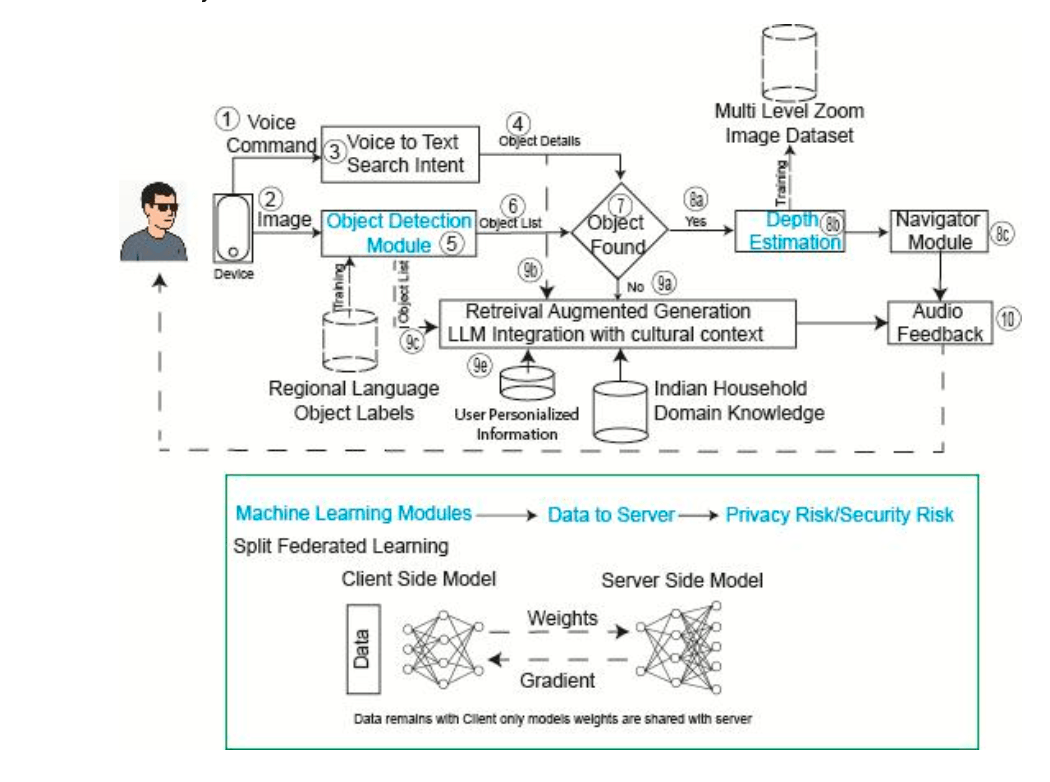

This assistant helps visually impaired users locate indoor objects by coordinating a multi-agent state machine inside the LiveKit ecosystem. Gemini acts as the central reasoning engine and sequences the specialized agents. An initial state captures the user's intent and location. The object detection agent pairs YOLOv11 with SentenceTransformers (MiniLM), using cosine-similarity matching so that a user term like "mug" maps to a detector label like "cup". When the object is detected, a depth estimation model adds spatial awareness; when it is missing from the frame, a RAG agent queries a structured household knowledge base for contextual location hints. Voice I/O stays low-latency via OpenAI STT and Sarvam TTS.
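The matching and hand-off step can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the `State` enum, the `route` function, the `0.6` threshold, and the toy embedding table are all hypothetical. In the real pipeline the `embed` callable would presumably wrap the MiniLM encoder and `detected_labels` would come from the YOLO output for the current frame.

```python
import math
from enum import Enum, auto
from typing import Callable, Optional, Sequence, Tuple

Vector = Sequence[float]


class State(Enum):
    """Hypothetical names for the two follow-up agents."""
    DEPTH_ESTIMATION = auto()  # object is in frame: estimate its distance
    RAG_LOOKUP = auto()        # object not in frame: ask the knowledge base


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def route(
    query: str,
    detected_labels: Sequence[str],
    embed: Callable[[str], Vector],
    threshold: float = 0.6,  # assumed cut-off, would need tuning
) -> Tuple[State, Optional[str]]:
    """Pick the detected label closest to the query and choose the next state."""
    query_vec = embed(query)
    best_label: Optional[str] = None
    best_score = -1.0
    for label in detected_labels:
        score = cosine_similarity(query_vec, embed(label))
        if score > best_score:
            best_label, best_score = label, score
    if best_label is not None and best_score >= threshold:
        return State.DEPTH_ESTIMATION, best_label
    return State.RAG_LOOKUP, None


# Toy 3-dimensional vectors standing in for real sentence embeddings.
TOY_EMBEDDINGS = {
    "mug": (0.9, 0.1, 0.0),
    "cup": (1.0, 0.0, 0.0),
    "chair": (0.0, 1.0, 0.0),
    "keys": (0.0, 0.0, 1.0),
}


def toy_embed(text: str) -> Vector:
    return TOY_EMBEDDINGS[text.lower()]


if __name__ == "__main__":
    print(route("mug", ["chair", "cup"], toy_embed))
    print(route("keys", ["chair", "cup"], toy_embed))
```

Passing the embedding function in as a parameter keeps the routing logic independent of any particular model, so the threshold and fallback behaviour can be exercised without loading MiniLM or running the detector.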